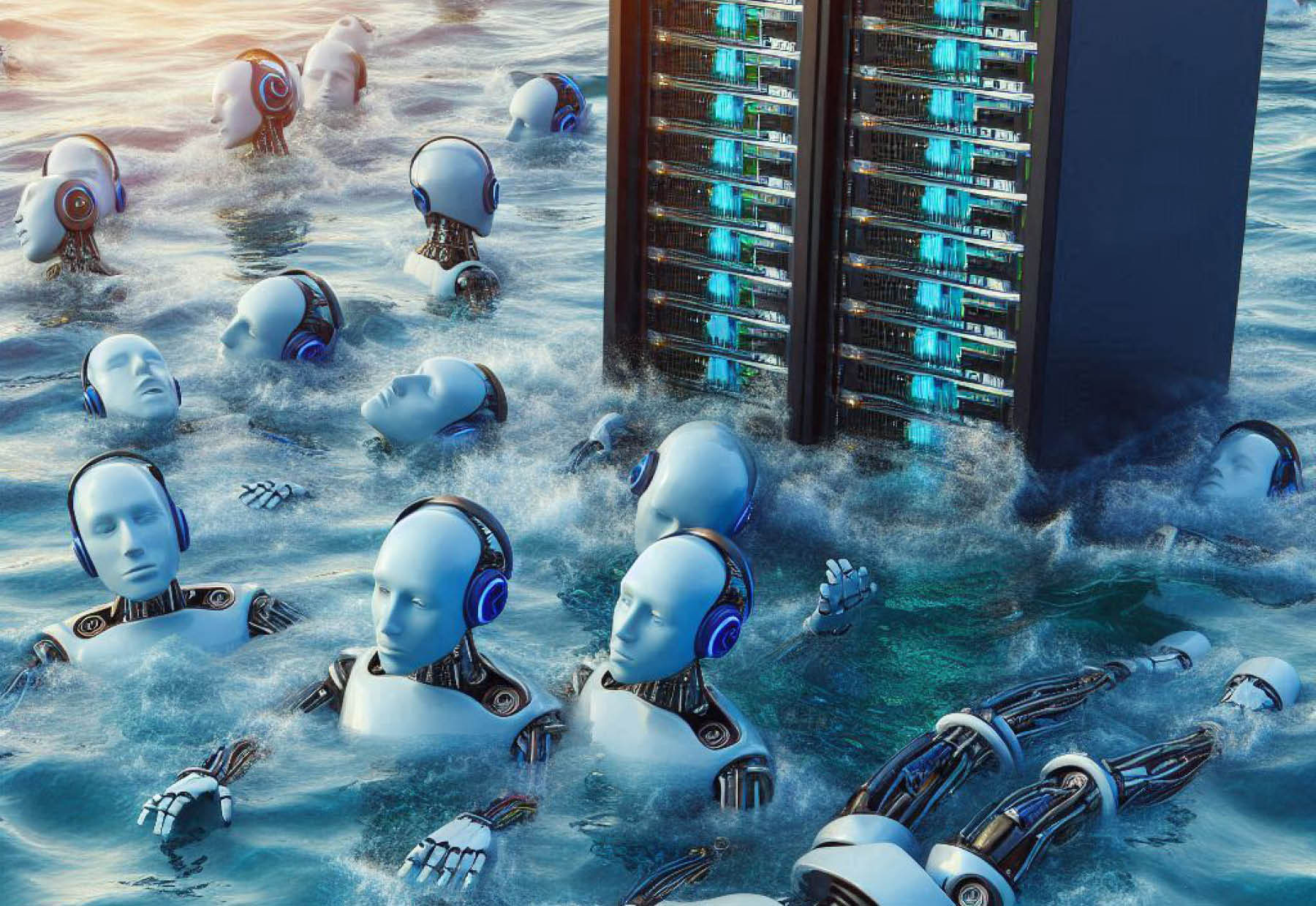

The Impact Exchange: Is AI the new plastic?

You’ve cut single-use plastic. You’ve offset your flights. Maybe you even drive an EV. But when it comes to your digital footprint, there’s a growing blind spot many sustainability strategies overlook: AI.

Each time you prompt an AI model like ChatGPT, you're not just generating a lightning-fast response. You're also consuming up to 500 milliliters of water, roughly the contents of a standard water bottle, to cool the servers behind the screen.

Other estimates suggest that training a single large language model can produce as much carbon as five petroleum-powered cars emit over their lifetimes. And that's just today. With AI use accelerating across the globe, responsible tech usage must be part of the equation.

"AI has been marketed as immaterial and magic – just code in the cloud – but it is driven by infrastructures that require massive amounts of energy, water and minerals while relying on undervalued labor globally," Kate Crawford, a globally renowned AI scholar and one of Time magazine's 100 most influential people in AI, tells The CEO Magazine.

"Many leaders are captivated by the promises of scale and profit while outsourcing the true costs to other nations and future generations. These hidden effects remain invisible in boardrooms because they are excluded from the models of value used in AI’s growth narratives."

Sustainability with visibility

According to Crawford, real sustainability in AI starts with full transparency. In practice, that means clearly disclosing the water, energy and emissions footprint of AI systems, just as is already done with vehicles and appliances. But few leaders are asking about these impacts, she points out, and even fewer are demanding accountability.

"Until leaders expand what they measure as ‘success’, AI will continue to operate as a planetary-scale system that hides its most consequential impacts," she says.

For business leaders racing to implement AI tools without fully understanding their environmental cost, Crawford says now is the time to rethink the trade-offs.

"We know the sustainability ratings of our washing machines – why not technology as significant as AI?" she asks.

Replacing legacy waste

While the environmental concerns are real, AI is not inherently unsustainable. When deployed responsibly, it can help eliminate older, more damaging systems.

That’s the case for Eagle Eye, a global marketing tech company helping retailers digitize engagement while reducing waste. Jonathan Reeve, Vice President of Eagle Eye's Asia–Pacific region, shares how a collaboration with leading French grocer E.Leclerc replaced printed catalogs with a fully digital flyer.

"Our AIR platform eliminates the need for paper-based marketing, like traditional weekly catalogs still used by many retailers," Reeve explains. "That reduces deforestation, water consumption and greenhouse gas emissions."

In this instance, he points out, AI isn't amplifying harm; it's doing what technology should do: solving problems without creating new ones. That's the standard more leaders will need to meet: using digital tools to decarbonize, not just digitize.

Saying ‘no’ to the hype

At asset management consultancy COSOL, CEO Scott McGowan is taking a similarly cautious stance. While AI presents clear opportunities, McGowan says his team isn’t getting swept up in the hype.

"Yes, our digital footprint reflects our sustainability values – largely because we’re not blindly chasing every AI trend," he says. "We’re taking a measured, whole-of-organization approach that prioritizes trust, value and long-term impact."

That includes resisting the pressure many leaders feel to rapidly adopt new AI tools without fully considering their implications.

"Trust in AI must be built – trust in security, in algorithms, in data," McGowan says. "And that trust must align with your company’s purpose and sustainability values."

Asking the harder questions

For Victoria McKenzie-McHarg, CEO of Women’s Environmental Leadership Australia, the conversation around AI must expand beyond productivity.

"We’re currently hearing a lot of positive narratives around the potential of AI, and rightly so," she says. "But we also need to grapple with the very real and significant ethical and environmental consequences of these tools."

McKenzie-McHarg points to concerns like embedded bias, corporate secrecy and the impact on water and energy systems. She cites DeepSeek, an emerging AI platform claiming improved energy and water efficiency, as an encouraging development – but one that prompts further scrutiny.

"Are these innovations being shared openly, or are commercial interests gatekeeping environmental progress?" she asks. "As leaders, we carry a moral and ethical responsibility to interrogate the impact of these technologies and not just accept them because they’re widely adopted."

AI greenwashing

The question is no longer whether AI will change the world – it already has. The real question now is how it will change it. And, importantly, at what cost?

Some companies are now branding their models as more energy efficient or ‘water aware’. But Crawford warns we may be entering the era of AI greenwashing.

"We’re in a moment of the Jevons Paradox: when increases in efficiency paradoxically result in increased demand," she explains.

"Without clear, audited reporting of energy, water and emissions, claims of sustainability risk becoming reputational shields rather than genuine transformation."

Crawford draws a powerful parallel to the early days of plastic, which was initially hailed as a miracle innovation, only to later be revealed as an environmental disaster.

"Like plastic, AI systems are built for scale and convenience, making accountability fragmented and opaque," she says.

The future of AI

However, AI's harms are not just environmental. They are social and political as well, Crawford points out: AI is reshaping labor markets, governance systems and social inequalities while depleting physical resources.

"This layered complexity makes AI even more consequential than plastic in its long-term impacts if left unchecked," she notes.

The solution, she adds, isn’t to reject AI but rather to recalibrate it.

"The future of AI must be rooted in responsibility and accountability if it is to serve as a force for our collective flourishing," Crawford insists.

"A critical step forward is investing in AI systems that are smaller, more energy efficient and designed with a clear social purpose rather than endless growth. We also need leaders who ask hard questions about how AI will help and to design for what’s really needed – not just following the hype."